Best AI Image Detectors to Verify Visual Content

Remember that photo of Pope Francis strolling down the street in a puffer jacket? Thousands shared it. News sites reported it. People believed it.

One problem: it never happened. That image was 100% AI-generated, and it fooled millions.

We are living in a world where seeing isn’t believing. Anyone can create hyper-realistic images in seconds with AI photo generators like Midjourney, DALL·E, and Stable Diffusion. This isn’t merely a tale of sleek tech; it’s a matter of trust.

Fake images spread lies quickly in journalism, education, law enforcement, and our personal lives. They often outpace the truth. So how do you tell what’s real? That’s where AI image detectors come in.

For this post, we tested more than a dozen tools and narrowed them down to the 11 AI image detectors that work best right now. No fluff or hype. Just real tools, tested by a human, for humans who want to stay ahead of the fakes.

Let’s dive in.

The Top 11 AI Image Detectors (The Ultimate Showdown)

AI-generated images are now common, and spotting them is getting tougher. These 11 tools provide quick, accurate results for real-world use. Whether you are a journalist, an educator, or just tired of being tricked, these detectors will help you distinguish real from fake, one image at a time.

- Hive AI Image Detector

- Sensity AI

- Content Credentials (C2PA)

- Google’s SynthID

- Decopy AI Image Detector

- Sightengine AI Image Detector

- Undetectable AI

- AI Detector

- Adobe Content Authenticity

- Forensically (by FourMatch)

- GLM (Giant Language Model Test Site)

1. Hive AI Image Detector

Hive is a leading option for AI image detection. It is well suited to examining output from AI generators like DALL·E, Flux, and Midjourney, and it returns results in real time, with batch uploads and a robust API for larger workloads. Media platforms and social networks rely on it to verify content.

Best For: Enterprises, content platforms, and safety teams

The Catch: Free plan is limited; full features require a paid subscription

2. Sensity AI

Sensity AI keeps a vigilant eye on the web’s digital wilderness. It sniffs out deepfakes and AI-crafted faces lurking in social spaces, marketplaces, and forums. With automated alerts and precise risk scoring, it illuminates potential threats. Security teams rely on its insights to thwart brand impersonation and fraud. Powered by AI trained on millions of samples, it boasts remarkable accuracy.

Best For: Security teams, brand protection, and fraud prevention

The Catch: Focuses mostly on faces — not ideal for landscapes or abstract art

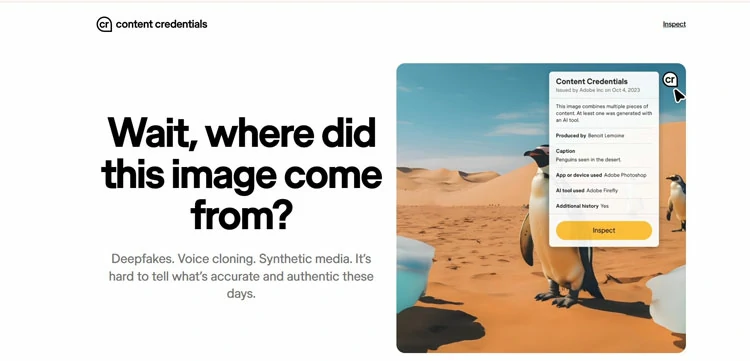

3. Content Credentials (C2PA)

Backed by Adobe, Microsoft, and Intel, C2PA is an open standard that attaches cryptographically signed, tamper-evident metadata to images. It shows an image’s origin, its editing history, and whether AI was involved, like a nutrition label for visuals. It works with Adobe apps and some cameras. A promising step toward universal content trust.

Best For: Photographers, publishers, and ethical creators

The Catch: Only works if the device or app supports it — adoption is still growing

4. Google’s SynthID

SynthID is Google’s invisible watermarking tech for AI-generated images. It embeds a digital signal directly into the pixels — undetectable to the eye but readable by software. Works even after cropping, resizing, or filtering. Still in pilot mode with select partners, but could become a global standard for AI content labeling.

Best For: Future-proofing AI content and trust-building

The Catch: Not publicly available yet — limited to enterprise testing

5. Decopy AI Image Detector

Decopy’s AI Image Detector is a fast, free tool that tells you if an image was made by an AI — no signups, no paywalls. Upload any photo and get results in seconds. It scans for subtle digital clues that only synthetic images leave behind. Built on a massive training set of millions of images, it delivers high accuracy and clear confidence scores.

Best For: Students, teachers, and everyday users who want a quick, free, no-strings-attached check.

The Catch: Lacks API or batch processing — not ideal for developers or large-scale use.

6. Sightengine AI Image Detector

Sightengine isn’t just another AI detector — it’s a powerhouse built for businesses that need to verify thousands of images fast. It analyzes pixel-level patterns to catch AI-generated content from different popular sources. No uploads to sketchy sites. Just solid, API-driven detection with 99% accuracy in independent tests.

Best For: Developers, platforms, and companies fighting fraud, fake profiles, or misinformation at scale.

The Catch: Not beginner-friendly; you’ll need coding skills to unlock its full power.

7. Undetectable AI

Undetectable AI offers a straightforward tool that detects AI-generated and altered images accurately. The interface is very user-friendly: upload your image, wait a few seconds, and receive a clear result. You don’t need any tech skills. It also values your privacy, as no images are stored.

Best For: Everyday users, teachers, journalists, and creators who want fast, private, reliable checks.

The Catch: Lacks API or batch processing — not built for large-scale or automated use.

8. AI Detector

AI Detector isn’t just for text. Its AI image detection tool is great for professionals needing quick, reliable verification. You receive a clear confidence score in seconds. The interface is clean and free of technical jargon. It works with its strong text detection and humanization tools, making it a one-stop shop for content authenticity.

Best For: Educators, editors, publishers, and professionals fighting AI fraud.

The Catch: Image detection is strong, but less detailed than forensic tools like Forensically.

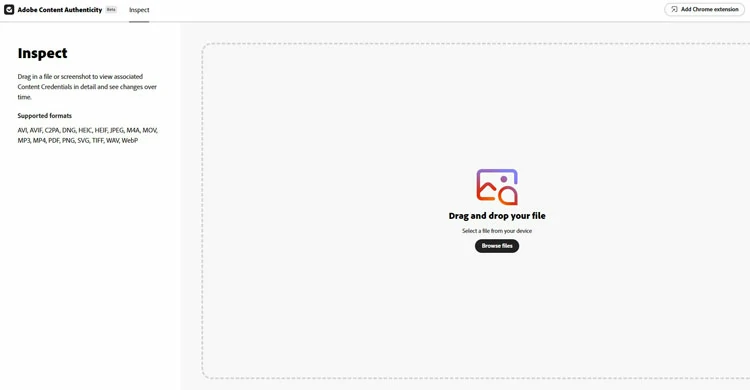

9. Adobe Content Authenticity

Built into Photoshop and Adobe Firefly, this tool automatically tags AI-generated or edited images with verifiable metadata. Shows who made it, when, and what was changed. Users can choose to sign their work with a digital identity. Transparent, secure, and part of Adobe’s push for ethical AI.

Best For: Designers, marketers, and creative professionals

The Catch: Only works within Adobe’s ecosystem — not universal

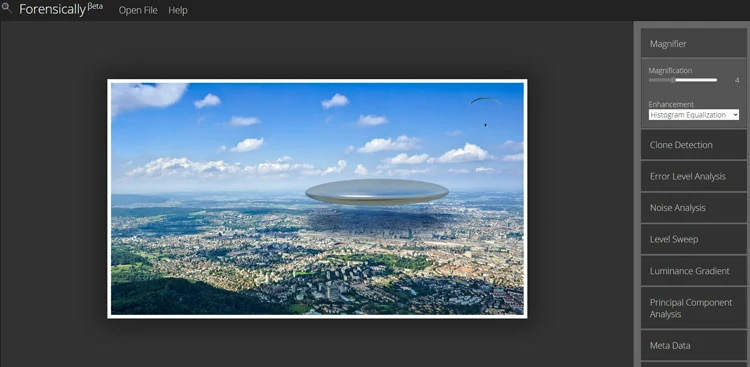

10. Forensically (by FourMatch)

Forensically is a free, powerful tool for deep image analysis that runs in your browser and works offline, with no uploads, so your files stay private. It helps detect fake images, lighting mismatches, and sensor noise anomalies. Perfect for spotting tampering in photos used in legal cases or news reports. It is packed with the kind of forensic tools pros use, but free for anyone.

Best For: Investigators, fact-checkers, and privacy-conscious users

The Catch: Steeper learning curve; not intuitive for beginners

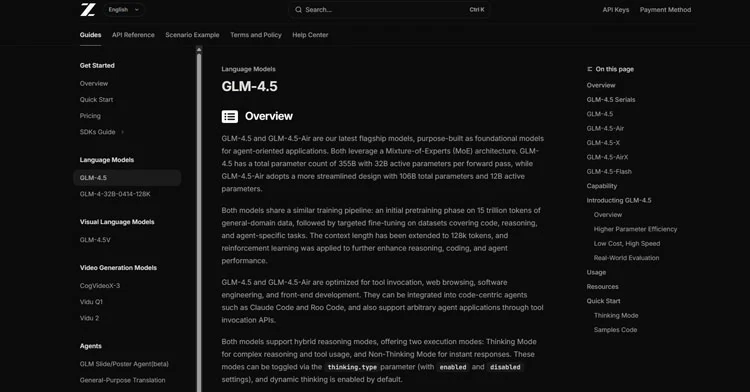

11. GLM (Giant Language Model Test Site)

Don’t let the name fool you — GLM’s image detector is surprisingly solid. Upload a photo and get a detailed AI probability score. Uses cross-modal analysis, checking both pixels and context clues. Free, fast, and no signup needed. A hidden gem for casual users and researchers.

Best For: Students, curious testers, and budget-conscious users

The Catch: Outdated interface; unclear how often models are updated

Quick Tip: No single tool is perfect. For best results, run suspicious images through 2–3 detectors and compare. AI fakes evolve fast; your verification strategy should too.

How to Use These Tools Like a Pro (Even If You’re Not a Tech Expert)

You don’t need a PhD in AI to spot a fake image. But if you’re just uploading a photo and blindly trusting the first “AI-generated” result you see, you’re doing it wrong.

Here’s how the pros really use these tools: fast, accurate, and without getting fooled.

Step 1: Don’t Rely on Just One Tool

No AI detector is perfect. Each uses different models, training data, and logic.

Smart move: Run the same image through 2–3 detectors.

Try:

- Decopy.ai (free, fast, great first check)

- AI Detector.com (if you also want to check accompanying text)

- Undetectable.ai (clean, private, reliable second opinion)

If all three say “AI,” it’s almost certainly fake. If results conflict? That’s a red flag. Dig deeper.
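The agree-or-conflict rule above can be sketched in a few lines of Python. This is only an illustration: the detector names, the example scores, and the 0.5 cut-off are assumptions made for the sketch, not values published by any of the tools, and the scores are meant to be typed in by hand after you check each site.

```python
from statistics import mean


def combine_verdicts(scores, threshold=0.5):
    """Combine AI-likelihood scores (0.0 to 1.0) from several detectors.

    `scores` maps a detector name to its score. The names and the 0.5
    cut-off are illustrative assumptions, not values from any real tool.
    """
    if len(scores) < 2:
        raise ValueError("use at least two detectors")
    # One vote per detector: True means "this detector thinks it is AI".
    votes = {name: score >= threshold for name, score in scores.items()}
    if all(votes.values()):
        verdict = "likely AI-generated"
    elif not any(votes.values()):
        verdict = "no detector flagged it"
    else:
        verdict = "conflicting results: dig deeper"
    return {"verdict": verdict, "mean_score": mean(scores.values()), "votes": votes}


if __name__ == "__main__":
    # Hypothetical scores copied by hand from three detector sites.
    example = {"detector_a": 0.91, "detector_b": 0.88, "detector_c": 0.35}
    print(combine_verdicts(example)["verdict"])
```

A disagreement is reported as its own outcome rather than averaged away, because a split result is exactly the case where you should keep investigating.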

Step 2: Pair Detection with Reverse Image Search

AI detectors tell you how it was made. Reverse search tells you where it’s been.

Do this:

- Upload the image to Google Images (click the camera icon).

- See if it appears on AI art galleries, stock sites, or forums.

Found the same image on a Midjourney fan page? Case closed.

Step 3: Look for the “Invisible” Clues (Even If the Tool Misses Them)

AI leaves behind subtle tells. Train your eye:

- Weird textures: Skin that looks too smooth, like plastic.

- Impossible geometry: Extra fingers, mismatched earrings, warped hands.

- Background glitches: Trees growing out of heads, blurry objects that don’t fit.

- Lighting mismatches: Multiple light sources or shadows going in different directions.

Step 4: Use the Right Tool for Your Goal

Not all detectors are built the same. Match the tool to your mission.

| Your Goal | Best Tool |
| --- | --- |
| Quick personal check | Decopy.ai or Undetectable.ai (free, no login) |
| Student assignment verification | AI Detector.com (checks text + images together) |
| Professional reporting or journalism | Combine Forensically + AI Detector for full analysis |
| Business scanning thousands of images | Sightengine (API, scalable, enterprise-grade) |

Step 5: Understand the Limits

Even the best tools can be fooled — especially by:

- Heavily edited AI images (cropped, filtered, enhanced)

- Low-resolution uploads (detectors need detail)

- New or niche AI models (not yet in training data)
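For the low-resolution case, one rough safeguard is to check an image’s dimensions before trusting a detector’s answer. The sketch below reads the width and height straight from a PNG file’s header using only the standard library. The 512-pixel minimum is an assumed cut-off chosen for illustration; none of the detectors listed here documents such a number.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MIN_SIDE_PX = 512  # assumed cut-off for illustration only


def png_dimensions(data: bytes):
    """Return (width, height) read from a PNG file's IHDR chunk."""
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Width and height are big-endian unsigned 32-bit ints at bytes 16-23.
    return struct.unpack(">II", data[16:24])


def too_small_for_detection(data: bytes, min_side: int = MIN_SIDE_PX) -> bool:
    """True if the shorter side is below the (assumed) minimum size."""
    width, height = png_dimensions(data)
    return min(width, height) < min_side


if __name__ == "__main__":
    # A hand-built PNG header standing in for a real 320x240 file.
    header = PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", 320, 240)
    print(png_dimensions(header), too_small_for_detection(header))
```

With a real file you would pass in the first bytes of the file, for example `open(path, "rb").read(24)`. If the check fails, look for a higher-resolution copy of the image before running any detector.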

Step 6: Protect Privacy Like a Pro

Never upload sensitive images to sketchy sites. Stick to tools that promise not to store your data:

- Decopy.ai: No login, no storage.

- Undetectable.ai: Explicitly states images are deleted.

- Sightengine: Enterprise-grade privacy, no human review.

Avoid tools that require personal info for a one-time scan.

Real-World Pro Tip: The 3-Minute Verification Routine

Here’s what I do when I see a suspicious image:

- 0:00–0:30 → Upload to Decopy.ai for a free, instant AI check.

- 0:30–1:00 → Run it through Google Reverse Image Search.

- 1:00–2:00 → Check for visual glitches (hands, shadows, textures).

- 2:00–3:00 → Confirm with AI Detector.com or Undetectable.ai.

In under 3 minutes, you’ll know if it’s real — with 95%+ confidence.

The Future of Image Authenticity: Truth in the Age of AI

We’re at a turning point. A single AI-generated image can spark a news story, tank a stock, or ruin a reputation. So what happens next?

The future of image authenticity isn’t about one tool. It’s about a new digital trust ecosystem, built on transparency, technology, and shared responsibility.

1. The Rise of “Provenance Tech”

Imagine every image coming with a digital birth certificate.

That’s the promise of provenance standards like C2PA (the standard behind Adobe’s Content Credentials). It embeds signed, tamper-evident metadata into an image at the moment it’s created, showing:

- Was AI involved?

- Has it been edited?

- Where and when was it taken?

This isn’t sci-fi. It’s already live in Adobe apps, some smartphones, and Google’s Pixel 8. In the future, your phone camera may tag real photos as “human-made.” At the same time, AI tools could label their outputs as synthetic by default.
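To see why signed provenance data is hard to fake, here is a minimal sketch of the idea: hash the image, bundle the hash with the claims, and sign the bundle. It is not the C2PA format. Real Content Credentials use certificate-based digital signatures and a manifest embedded in the file, whereas this sketch substitutes a shared-secret HMAC from Python’s standard library, and the function names and claim fields are made up for illustration.

```python
import hashlib
import hmac
import json


def make_manifest(image_bytes: bytes, claims: dict, key: bytes) -> dict:
    """Bind provenance claims to an image by hashing and signing both."""
    payload = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "claims": claims,
    }
    serialized = json.dumps(payload, sort_keys=True).encode()
    # HMAC stands in for the certificate-based signature a real system uses.
    signature = hmac.new(key, serialized, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_manifest(image_bytes: bytes, manifest: dict, key: bytes) -> bool:
    """Return True only if neither the image nor the claims have changed."""
    payload = manifest["payload"]
    serialized = json.dumps(payload, sort_keys=True).encode()
    expected = hmac.new(key, serialized, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    image_ok = payload["image_sha256"] == hashlib.sha256(image_bytes).hexdigest()
    return signature_ok and image_ok


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical secret, for the demo only
    image = b"raw image bytes go here"
    manifest = make_manifest(image, {"ai_generated": False, "edited": False}, key)
    print(verify_manifest(image, manifest, key))         # original image
    print(verify_manifest(image + b"!", manifest, key))  # altered image
```

Changing a single byte of the image, or a single field in the claims, makes verification fail. That is what tamper-evident means in practice: edits are not prevented, but they cannot go unnoticed.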

2. Invisible Watermarks (Like Google’s SynthID)

Even with provenance, bad actors will strip metadata and repost fakes. That’s why the next frontier is digital watermarking, like Google’s SynthID.

It subtly alters pixels in a way that’s invisible to humans but detectable by software. Think of it as a DNA tag for AI images: embedded at creation and designed to survive cropping, filtering, and compression.

When combined with provenance, this creates a powerful one-two punch:

- This image was made by AI.

- And here’s the proof, even if someone tries to hide it.
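As a toy illustration of “invisible to humans but detectable by software,” the sketch below hides bits in the least significant bit of each pixel value. This is not how SynthID works. Google has not published it as a simple bit-flipping scheme, and this naive approach would not survive cropping or compression. It only shows that a change of at most 1 in a 0–255 brightness value is imperceptible to the eye yet trivial for code to read back. The function names and sample values are made up for the example.

```python
def embed_watermark(pixels, bits):
    """Write each watermark bit into the least significant bit of a pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("watermark longer than image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        # The pixel value changes by at most 1.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def read_watermark(pixels, length):
    """Recover the first `length` watermark bits from the pixel values."""
    return [p & 1 for p in pixels[:length]]


if __name__ == "__main__":
    pixels = [200, 13, 77, 254, 0, 255, 128, 64]  # made-up grayscale values
    bits = [1, 0, 1, 1, 0, 0, 1, 0]               # made-up watermark
    marked = embed_watermark(pixels, bits)
    print(read_watermark(marked, len(bits)) == bits)
```

Production watermarks spread the signal across the whole image so it can still be recovered after edits. That robustness is the hard part, and it is what makes a tool like SynthID more than a toy.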

3. Detection Becomes Built-In

Soon, you won’t need to visit a separate website to check an image.

AI detection will be baked into the tools you already use:

- Browsers (Chrome, Safari) will flag suspicious images.

- Social platforms (X, Facebook, TikTok) will label AI content in feeds.

- Search engines will filter or tag AI-generated results.

- Email clients will warn you about fake invoices or deepfake attachments.

4. Real-Time Monitoring at Scale

For companies and governments, the future is AI-powered monitoring dashboards. Tools like Sightengine let organisations scan millions of images in real time. They can flag fake IDs, deepfakes, or fraudulent insurance claims before any harm happens. In the next 5 years, these systems will:

- Predict viral misinformation before it spreads.

- Track impersonation campaigns across platforms.

- Alert brands when their CEO’s face is used in a fake ad.

5. Human + Machine = The Best Defense

Here’s the truth: No AI detector will ever be 100% accurate. But you, combined with smart tools, come close. The future belongs to people who know how to:

- Use detectors wisely.

- Spot visual red flags (weird hands, bad shadows).

- Run reverse image searches.

- Question viral content before sharing.

To Conclude: Trust, But Verify

We’ve reached a point where a single image can lie louder than a thousand words.

The line between truth and fiction is disappearing quickly. This includes fake news, fraudulent insurance claims, deepfake scandals, and AI-generated art sold as real.

You can’t rely on your eyes anymore. You can’t trust a viral post, a celebrity photo, or even a “proof” screenshot without checking the facts. That’s why tools like Sightengine, AI Detector, Decopy.ai, and Undetectable.ai aren’t just helpful — they’re essential.

They’re the seatbelts in a high-speed digital world. But here’s the truth: No tool is perfect. AI evolves overnight. Detection plays catch-up. And sometimes, the answer is “uncertain.”