AI vs. Human Product Photo Retouching: What Our 2026 Head-to-Head Test Actually Found

AI retouching makes image adjustments simpler and faster. What matters for production, though, is how it performs across real-world post-production use cases.

To find out, we ran a structured, head-to-head evaluation across several product photography categories: cosmetics, luxury goods, high-gloss materials, hair care products, detailed labels, and products with complex textures. We compared three leading AI image models (GPT Image 1, GPT Image 1.5, and Nano Banana Pro) with CEI’s professional human retouchers. Each output was assessed across 11 performance fields that matter in real-world post-production workflows, allowing for a detailed and consistent comparison.

Here’s what we found in our latest study, AI vs. Human Retouchers in Product Photography.

The results

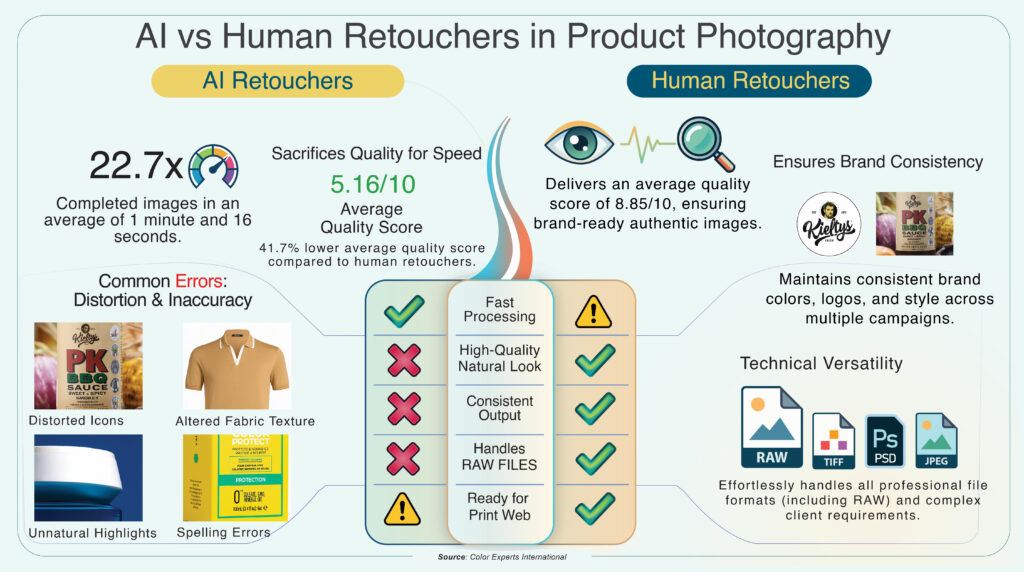

On speed, AI dominates. On quality, humans still set the baseline.

Across the full test set, AI was 22.7× faster on average, but delivered a 41.7% lower quality score than human retouchers.

Here are the averages:

- Human retouchers: 28.65 minutes, 8.85/10

- GPT Image 1: 2.45 minutes, 4.14/10

- GPT Image 1.5: 0.91 minutes (54.5 seconds), 5.35/10

- Nano Banana Pro: 0.42 minutes (25.4 seconds), 6.00/10

Nano Banana Pro was the fastest and the highest-scoring AI model in our study. But even the best AI option still fell short of human consistency and production readiness.
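As a quick sanity check, the headline figures can be reproduced from the averages listed above. This is a minimal sketch that assumes the "AI" figure is a simple unweighted mean of the three models; the aggregation method is an assumption for illustration, not something this summary states, and the variable names are hypothetical.

```python
# Averages as reported above: (minutes per image, quality score out of 10).
HUMAN = (28.65, 8.85)
AI_MODELS = {
    "GPT Image 1": (2.45, 4.14),
    "GPT Image 1.5": (0.91, 5.35),
    "Nano Banana Pro": (0.42, 6.00),
}


def mean(values):
    """Plain arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


# Assumption: every AI model carries equal weight in the overall AI average.
ai_minutes = mean(minutes for minutes, _ in AI_MODELS.values())
ai_quality = mean(score for _, score in AI_MODELS.values())

# How many times faster the average AI run is than the average human edit.
speedup = HUMAN[0] / ai_minutes

# How far the average AI score falls below the human score, in percent.
quality_gap_pct = (HUMAN[1] - ai_quality) / HUMAN[1] * 100

print(f"AI speedup: {speedup:.1f}x")
print(f"AI quality gap: {quality_gap_pct:.1f}%")
```

Under that assumption the script prints a 22.7x speedup and a 41.7% quality gap, which matches the figures quoted above.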

Where AI did well

AI performed best when the job was predictable. If the image was simple, and the requested edits didn’t require precision judgment, AI outputs could look decent quickly. This is why AI feels “good enough” in many quick tests, especially when you only review a single image.

In practical terms, AI worked better for early-stage outputs, fast previews, or basic cleanup where absolute accuracy is not critical.

Where AI struggled (and why it matters)

AI didn’t just score lower. It failed in ways that create real business risk, especially for ecommerce and brand-heavy packaging.

Label and text accuracy was one of the biggest issues. In several outputs, AI distorted typography, altered small text, or created inconsistent logo-like details. That’s a problem because packaging is not decoration. It’s product information. If the label changes, the image becomes untrustworthy.

Reflections and highlights often looked synthetic. Instead of removing glare cleanly and preserving surface realism, AI sometimes introduced an “AI polish” look that flattened textures, changed material behavior, or made products feel less real. Humans were more reliable at removing distractions without breaking the product’s natural lighting logic.

Authenticity and geometry were also weak points. Even slight shape warping, softened edges, or missing micro-texture can make a product look off. A customer may not explain why they feel unsure, but they’ll hesitate. That hits conversion.

Consistency was another gap. AI results varied widely depending on image type and prompt attempt, while humans stayed stable across the categories and performance fields. That difference matters most when you are producing a catalog or a full campaign. You can’t ship “some images look perfect and some look weird” and call it consistent branding.

Technical versatility

This was one of the most important practical findings. When formats were unsupported (for example, some RAW or high-resolution TIFF cases), AI tools could fail to interpret the input image correctly. In extreme cases, the model could behave as if it had not received the image properly, producing irrelevant or unreliable results.

Human retouchers don’t have this weakness. They work inside professional pipelines where RAW, TIFF, PSD, and layered workflows are normal.

If your workflow depends on pro formats, reliability is not optional. It’s the difference between “we can scale this” and “we just created a rework machine.”

Hybrid isn’t hype, but it’s not automatic either

We also looked at hybrid workflows (AI + human). The honest takeaway is that hybrid works only when the AI output is reusable. If AI introduces distortions, artifacts, or label issues, the editor wastes time fixing mistakes that shouldn’t exist.

When hybrid worked, it saved meaningful time. In one test case (a hair and cosmetic care product), the hybrid workflow cut total time from 10.00 minutes to 7.18 minutes, a 28.2% reduction, while still meeting production standards because humans handled the final accuracy and QC.

Hybrid is not “replace humans.” It’s “use AI where it behaves reliably, then let humans finalize, correct, and standardize.”
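The time saving in the test case above is simple arithmetic, sketched here for anyone who wants to plug in their own numbers. The variable names are hypothetical, and treating the 10.00-minute figure as the human-only baseline is our reading of the test case.

```python
# Figures from the hair and cosmetic care test case above, in minutes.
BASELINE_MINUTES = 10.00  # assumed to be the human-only workflow
HYBRID_MINUTES = 7.18     # AI first pass plus human finishing and QC

# Share of the baseline time that the hybrid workflow removes.
time_saved_pct = (BASELINE_MINUTES - HYBRID_MINUTES) / BASELINE_MINUTES * 100

# The same result expressed as a throughput multiple (images per unit time).
throughput_gain = BASELINE_MINUTES / HYBRID_MINUTES

print(f"Time saved: {time_saved_pct:.1f}%")
print(f"Throughput gain: {throughput_gain:.2f}x")
```

Note that a 28.2% reduction in time corresponds to roughly 1.39x the throughput, not 1.28x, so it is worth stating which of the two a "faster" claim refers to.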

What this means for brands in 2026

AI is already useful, especially as a speed layer. But speed alone doesn’t make an image shippable. Production-ready means accurate labels, natural materials, stable geometry, and consistent results across large sets.

So the practical conclusion from our head-to-head test is simple: human retouchers win on quality and precision, while AI wins on speed. Ultimately, though, it is less about who wins. AI is unlocking new possibilities across creative workflows and beyond, which makes it essential to bring this technology into our daily workflows.
